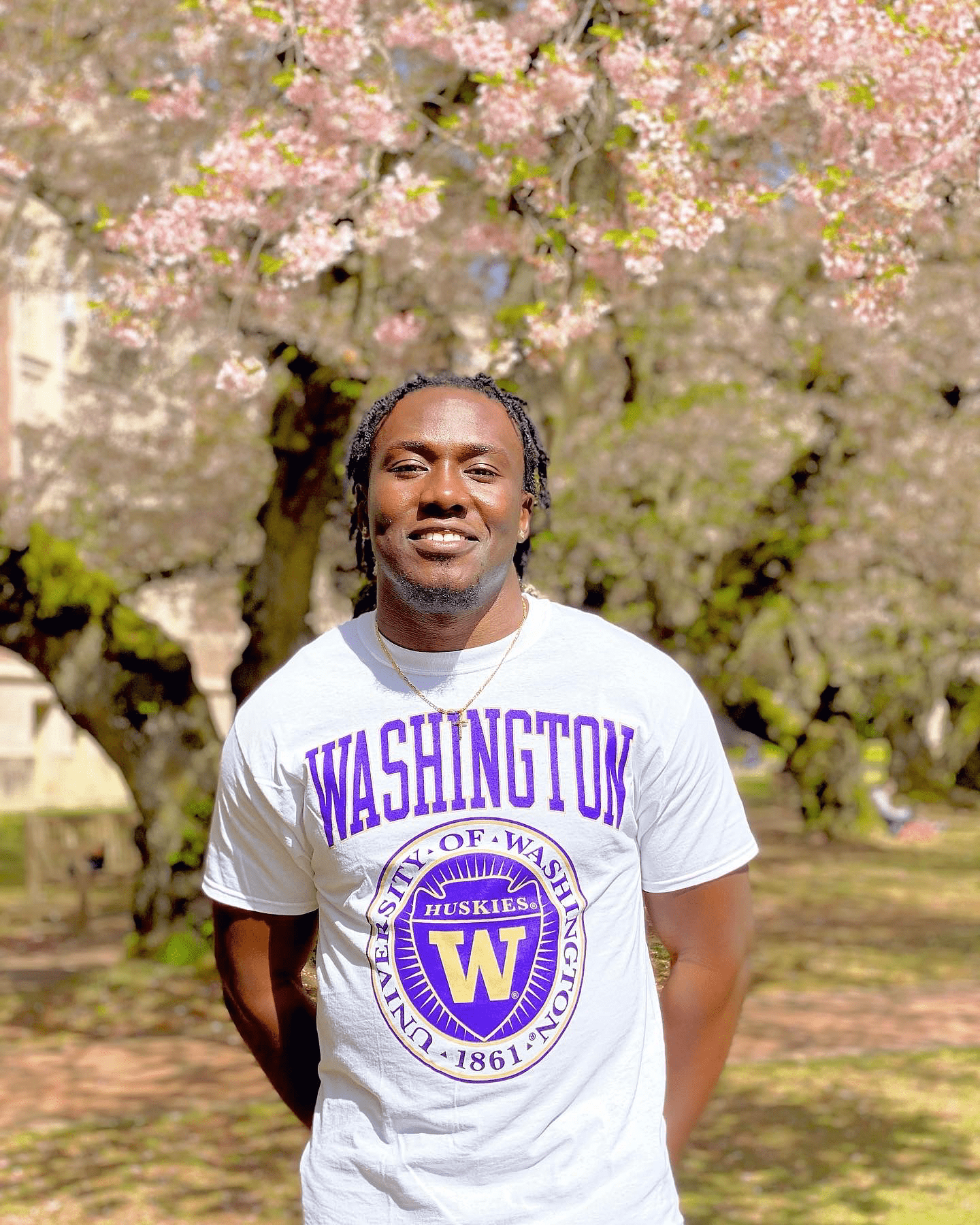

Responsible AI Research Scientist

I am a fourth-year Ph.D. candidate and GEM Fellow in the Department of Human-Centered Design and Engineering at the University of Washington, specializing in Human-Computer Interaction with a focus on AI fairness. I am a member of the Tactile and Tactical (TAT) Design Lab, under the guidance of Daniela Rosner, and the Wildlab, where I am also advised by Katharina Reinecke. Across these labs, my interdisciplinary research explores design, technology, and social impact.

I am deeply invested in promoting responsible AI/ML systems that prioritize fairness, inclusivity, and equity. My research investigates the sociotechnical implications of race and culture, especially in how intelligent systems and automated language technologies interact with underrepresented communities. Leveraging inclusive design and justice-driven frameworks, I aim to enhance user experiences, with a particular emphasis on Black American communities.

Research Interests

- Sociotechnical Implications of AI Technology

- Human-Computer Interaction

- Responsible AI

- Inclusive Design

- Data-Driven Solutions to Mitigate Racial Biases